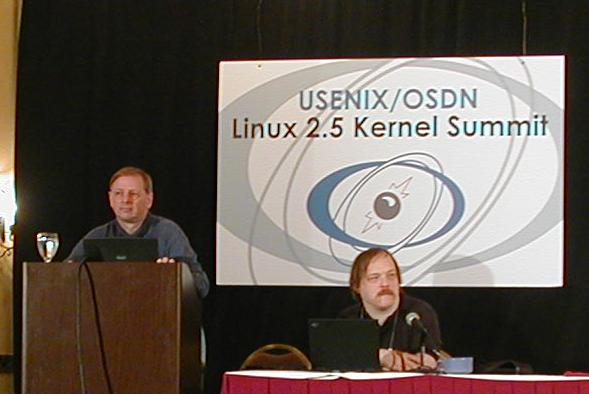

Keith Owens and Eric Raymond discuss the kernel build process

The Linux 2.5 kernel summit

Jonathan Corbet

LWN.net

April 2, 2001

The "Linux 2.5 Kernel Summit" is a two-day affair, held in San Jose, California; the organizers are Usenix and OSDN, and Ted Ts'o in particular. The purpose of this event was to get the core kernel hackers together in one place to meet each other and to look toward the imminent 2.5 development series. The attendee list shows 65 hackers, almost all of whom have names familiar to those who follow kernel development. It's an impressive gathering; more than one attendee has been heard to fret over what would happen to Linux if the big Silicon Valley earthquake were to strike during this event.

Below is coverage from Friday, not much changed since it was first posted on Saturday. Those who have seen it may want to skip down to the Friday BOF coverage or the Saturday coverage.

Check out our annotated group photo which puts names to as many people in the picture as possible.

It is worth noting that this writeup is full of "forward-looking statements." A lot of what is reported here will likely go into the 2.5 kernel, but all is subject to change until the code is actually accepted - and often even afterwards. This is a picture of how things might go; reality is certain to be different.

High-performance database requirements

The first presentation was by Lance Larsh of Oracle who, essentially, provided a laundry list of changes and features Oracle would like to see in order to get better performance out of high-end, large database servers. It comes down to the following:

The reception was generally positive - many or most of the above problems are well known and on the list to be fixed in 2.5 already. One exception was the non-preemption request, which was considered dangerous and unnecessary.

SCTP

SCTP is the "Stream Control Transmission Protocol," defined by RFC2960. It is intended to be a new, high-level protocol with many of the advantages of both TCP and UDP, and with some additional features, such as dynamic network failover for multihomed hosts. SCTP also incorporates multiple streams of messages into a single connection, which can bring performance benefits in situations where multiple streams are used.A beginning SCTP implementation was presented by La Monte Yarroll, along with a description of the changes that are needed in the kernel. Among other things, a new version of the bind() system call is needed which can bind a socket to multiple addresses, so that the failover mechanism can work.

Block layer redesign

It is generally accepted that the block I/O layer needs some major changes in the 2.5 development series. The 2.4 implementation is cleaner and faster than its predecessors, but a number of problems remain. Stephen Tweedie presented a list of things he thought needed to be addressed as a way of starting discussion on this topic. Much of his talk mirrored the list presented earlier by Oracle's Lanch Larsh, but there were a number of new items as well.The first set of problems with the current block layer have to do with scalability. The system needs to be able to handle very large numbers of devices, leading, among other things, to an exhaustion of the number of device numbers available (see also the March 29 LWN kernel page). Large memory systems need to be able to work without using bounce buffers. The current sector addressing scheme, which limits devices to 2TB, needs to be redone. 2TB may seem large, but RAID devices are already pushing that limit. There is also an issue with some SCSI devices which can report large numbers of units on each target.

Another current problem is with error reporting. If an I/O operation fails, the kernel has no way of knowing if the disk simply has a bad sector, or if the controller is on fire. As a result, a bad block will cause a drive to be removed from a RAID array, even though it is otherwise functioning fine. The system needs to provide information on just what has failed; it also needs to distinguish between read and write failures. In the case of a write failure, the data in memory is still good, and can be used by the system.

Performance issues also need to be addressed. The splitting up of large requests in the block layer is a recurring theme here. The kernel does not maintain separate queues for distinct devices; it provides all the mechanism for those queues, but it is up to the device driver to actually implement separate queues and handle the multiplexing of access to the controller. I/O scheduling fairness is also an issue at higher levels.

Then, there's a set of new features required by the kernel. I/O barriers are one such feature: a journaling filesystem, for example, needs to know that everything has been written to the journal before its associated "commit" record goes to disk. By placing a barrier, the journaling code could ensure that no operations are reordered around the commit. On SCSI disks, the barrier can be implemented with a SCSI ordered tag, meaning that here is no need to actually wait until the barrier has been reached. On IDE systems, instead, an actual "flush and wait" cycle would be required.

A similar topic is the need for explicit control of write caching in the drive itself. Disk drives typically report that a write operation is complete as soon as the data reaches the drive's internal cache, but it's often necessary to know that the data has actually reached the oxide on the disk itself. There's also a need for access to the real geometry of the drive; after years of assuming that drive geometries were complete fiction, various kernel subsystems are once again trying to find ways to lay things out optimally.

Finally, device naming came up. As hardware configurations become more dynamic, it's important to be able to track disks as they move around. The real discussion on naming came later, though...

There was surprisingly little controversy in this session, perhaps because it concentrated on goals and didn't get into actual implementation designs.

Integrating high-performance filesystems

Steve Lord of SGI presented some of the advanced features of the XFS filesystem, with the idea that, perhaps, some of them should be moved into the Linux VFS layer. The most interesting, certainly, was the "delayed write allocation" technique employed by XFS. When a process extends a file, XFS makes a note of the space that has been used, but does not actually go to the trouble of figuring out where it will live on the disk. Only when the kernel gets around to actually flushing the file data to the disk will that allocation be done. There are a couple of benefits to this scheme:The network driver API

Jamal Hadi Salim led a session describing changes to the network driver interface. The stock Linux kernel performs poorly under very heavy network loads - as the number of packets received goes up, the number of packets actually processed begins to drop, until it approaches zero in especially hostile situations. The desire, of course, is to change that.A number of problems have been indentified in the current networking stack, including:

Jamal's work (done with Robert Olsson and Alexey Kuznetsov) has focused primarily on the first two problems.

The first thing that has been done is to provide a mechanism to tell drivers that the networking load is high. The drivers should then tell their interfaces to cut back on interrupts. After all, when a heavy stream of packets is coming in, there will always be a few of them waiting in the DMA buffers, and the interrupts carry little new information.

When interrupts are off, the networking code will instead poll drivers when it is ready to accept new packets. Each interface has a quota stating how many packets will be accepted; this quota limits the packet traffic into the rest of the kernel, and distributes processing more fairly across the interfaces. If the traffic is heavy, it is entirely likely that the DMA rings for one or more drivers will overflow, since the kernel is not polling often enough. Once that happens, packets will be dropped by the interface itself (it has, after all, no place to put them). Thus the kernel need not process them at all, and they do not even cross the I/O bus.

The end result is an order of magnitude increase in the number of packets a Linux system can route. This work is clearly successful, and will likely show up in 2.5 in some form.

Hot plug devices

A common theme with modern hardware is the ability to attach and detach devices while the system is running - hot plugging. PCMCIA cards have worked that way for some time; now devices connected by USB, Firewire, SCSI, and hot swap PCI all are hot pluggable. The kernel needs to be able to deal with this kind of environment; USB maintainer Johannes Erdfelt gave a presentation on how that is being done.

Hot plugging raises a number of issues. How, for example, should a new device in the system be named? Device attributes - permissions, owner, configuration - should be preserved across plugging events when possible. Selecting the proper driver for a new device can be a challenge - sometimes there's more than one to choose from. Sometimes user space programs need to know about device events; it would be nice if the X window system could automatically work with a new USB mouse when it is plugged in.

The current scheme is to run a script - /sbin/hotplug, for every device event. It's the script's job to figure out what to do. Linus likes this approach, since it makes it easy to do the right thing most of the time.

Much of the time, however, was spent discussing the naming issue, and the associated issue of expanding the device number type. This one is going to be a big fight, perhaps one of the persistent flame wars of early 2.5 development.

The device number side actually looks easy: Linus has already stated that device numbers will go to 32 bits, with 12 for the major number and 20 for the minor. Naming is harder; the system needs to pick names for new devices that make sense to the user. Using the hotplug script to make links is one plausible approach; others include using devfs or having each device driver create its own naming filesystem which gets mounted, one way or another, when the device is attached.

There is little agreement on the proper approach; it's the devfs wars all over again. What does appear to be happening, though, is a gradual shift toward the idea that the kernel needs to dynamically export device names to user space. The final implementation may not be devfs, but a devfs-like interface kind of looks like the way of the future.

After this session, it was dinner and reception time, with beer for all.

Friday evening BOFs

Several birds-of-a-feather session were held after the reception on Friday. One involved a presentation of the Subversion source control system by Greg Stein. Subversion has the goal of replacing CVS over the next few years; it is built on top of Apache and the WebDAV protocol, and appears to have a number of nice features. The group is pushing toward a 1.0 release later this year.Linus attended the session and seemed impressed; he also commented that what he really liked was the name of the project.

Later on, Kanoj Sarcar presented his latest thoughts on the challenges presented by NUMA systems. NUMA (non-uniform memory access) machines are characterized by varying access times to memory - each processor has some "close" memory; everything else takes longer to get to. Proper memory management is critical for good performance on such systems.

Much technical discussion went by that your author didn't take down. There was also a lengthy discussion on the merits of this architecture; a number of kernel developers do not think that NUMA is the smartest possible design. As Linus pointed out, however, such machines exist and will continue to exist; Linux can either run well on them or not. It's probably best to put any personal distaste aside and deal with the situation.

The kernel build system

A number of bleary-eyed kernel hackers noted a lack of enthusiasm for a discussion on kernel makefiles first thing Saturday morning - it's just not one of the sexier topics. The presentation by Keith Owens and Eric Raymond was interesting, however. The complexity of the kernel is such that even the code for putting it all together is difficult. The kbuild system is often seen as a bit of a hack; starting in 2.5, it's likely to be a far better system.

Keith Owens and Eric Raymond discuss the kernel build process

Keith started by pointing out a number of problems with the current kbuild system. The configuration process can be long and painful - it asks far too many questions, especially for novice users. There are four different and not entirely compatible configuration mechanisms. Kernel configuration only works reliably in a top-to-bottom mode; it's hard to skip around. The makefiles are "twisted" and hard to understand. The "make dep" process for deriving file dependencies runs at the wrong time and is broken. How makefiles are run depends on how they were invoked, and can sometimes be surprising. Inter-directory dependencies do not work very well. Parallel makes can sometimes break, and do not parallelize as well as they could. The management of add-on code (such as the kdb patch) is difficult. And so on.

That is quite a bit of stuff to fix, but the kbuild hackers are on the job. The first step is to replace the configuration mechanism, generally referred to as "CML1." Back in May, 2000, Eric Raymond decided to have a look at this system, and posted the following note:

I've been examining the existing kernel configuration system, and I have about concluded that the best favor we could do everybody involved with it is to take it out behind the barn and shoot it through the head.

As was pointed out at the summit, when Eric talks that way it's best to take him literally. In this case, he has been working over much of the last year to produce CML2, a complete replacement for the current scheme. His code has been out for a bit; LWN took a look at it back in November. Eric presented the latest version, which will go out as 1.0 any day now, and pointed out some of its nicer features:

Configuration, however, is only part of the problem. Keith Owens and several other kbuild hackers are also working to replace the system that actually builds the kernel.

In the new system, the complicated Rules.make file is gone. There is, instead, a preprocessor which generates the tree of makefiles from a set of Makefile.in files. It includes its own minilanguage which makes it easy to add files - a number of single-line directives can cause specific files to be compiled when the right configuration options have been selected. It does appear to be a great simplification of the process.

The "make dep" stage is gone - dependency generation now happens as part of the regular build process. It uses a different mechanism which catches dependencies that the old code misses. There is also no separate "make modules" step; a single make builds everything that needs to be built. With the improved dependency mechanism, a kernel make can actually come back and say that nothing needs to be done; the 2.4 makefiles will always, at a minimum, link up a new kernel.

Nicely, the installation of kernels is now part of the makefile as well, making it possible to eliminate a step normally done by hand.

The new scheme also works well with add-on code. It can incorporate patches from outside the kernel tree, and can even work with read-only source trees. For people who add things to the standard kernel, the new system should be very nice to work with.

The new kbuild system is mostly implemented at this point, except for some work with dependencies. Using module versioning also currently does not work. The versioning code allows loadable modules to work with more than one kernel version, as long as the underlying interface has not changed; it is a bit of a hack and kernel developers generally avoid it. Keith asked how many developers actually use it, and got about four or five hands raised. (Interestingly, one of them was Linus, but he has said in the past that he doesn't use modules...).

When Keith asked, however, if versioning could be dropped entirely, the answer was a strong "no." Kernel hackers don't use it, but most or all distributors ship versioned kernels, and need to be able to continue doing so. So Keith needs to go back and fix up that little detail.

Virtual memory

Rik van Riel presented his thoughts on what needs to be done with virtual memory management in Linux. This talk covered a number of different areas where improvements could be made. Memory management is a complicated task, and there will always be possible improvements.

Rik van Riel

For starters, there is memory balancing, or "how do you choose which page to steal next?" The current implementation works by scanning process page tables and tossing out pages where it can. It "works OK" at this point, but is not always the most efficient way of solving the problem. Among other things, this approach can require a lot of work to free one page, and does not necessarily help free the right kinds of pages when they are needed.

One alternative could be a scheme oriented around working sets - keeping processes to a defined amount of physical memory. It's a complicated thing to implement, and runs into a number of snags: how, for example, do you account for pages that live in the file cache, and are easily shared among multiple processes?

There seems to be more interest in "reverse mapping." Currently it is not easy to find out which process (or processes) have a virtual mapping for any particular physical page. If a reverse mapping existed, page stealing could be implemented by scanning physical memory, which would make a number of things simpler. The downside is an extra eight bytes added to every single page table entry, doubling the size of the page tables. That is a heavy cost.

As Rik pointed out, though, if doubling the page table size is a heavy cost, that implies that the page tables are already too expensive. So perhaps it is time to look at ways of reducing that overhead.

One way of doing that is to go to larger pages. Many systems can handle pages that are far larger than the 4K or 8K used by Linux now. Using much larger pages will greatly reduce the number of page table entries needed. That, in turn, can reduce the number of page faults taken by a process, and also reduces the number of expensive translation buffer (TLB) misses that require a full page table lookup to be done.

Large pages are not suitable for all uses, of course - they can be tremendously wasteful of memory in their own right. So they would have to be mixed with regular, small pages to get reasonable system performance. But that brings some challenges - when it is time to bring in a large page, there needs to be a corresponding large, physically contiguous piece of memory to hold it. Large contiguous chunks of memory can be very hard to come by on virtual memory systems. So there would need to be either some sort of reservation system in place, or physical memory would have to be partitioned into large- and small-page zones.

The other way of reducing page table overhead is to allow page tables to be shared. Currently, if two processes set up a mapping to a shared memory region, they will get two separate page tables describing the same memory. In some situations - think about Oracle running many processes sharing many large memory areas - the duplicated page tables can eat up a lot of memory. Multiple page tables also make it hard to move shared memory regions out to swap.

Shared page tables are doable, but they have their own problems. The smaller one is that they require a 4MB alignment for every shared memory region. This constraint will not be a problem in most situations. The harder problem is coordinating access to the shared tables. In particular, another layer of locking would be required, leading to more complicated (and deadlock-prone) code and more expensive page faults. The locking problem is problematic enough that it may well block the implementation of shared page tables entirely.

Readahead mechanisms were discussed briefly. Linux currently has two of them: one which does straight readahead on file reads, and one which does clustering for mapped memory regions and swapping. It would be nice to combine the two; performance could be improved (and made more adaptive), and the madvise() call could be truly implemented. Better I/O clustering could also improve file caching performance, and make it easier to provide adjacent I/O requests to the block layer.

The VM system still has some problems with deadlocks in low memory situations. Occasionally it is necessary to allocate some memory in order to be able to free memory; if that allocation can not be done, things just stop. Solutions to this problem are likely to include limitations on the number of memory-freeing operations that are outstanding at any given time, and a reservation system that would set aside all of the needed memory before an attempt to free a given page is made.

Linux needs improved thrashing performance. Currently, if the system begins to thrash badly, everything just falls apart. Rik proposed a mechanism that would start suspending processes when the system begins to thrash, in order to stabilize the load. Such a system is doable and could be made reasonably fair. An interesting problem, however, is that it is evidently difficult for the system to know when it is in a thrashing state.

Some effort will also go into improving the maintainability of the current code; it could use better documentation and more consistent (and understandable) locking rules.

NSA Linux

Peter Loscocco from the National Security Agency presented the design of the mandatory access control system in its SE Linux distribution. Much of it was an overview of the system itself; the same information can be found on the NSA SE Linux site, so I'll not repeat it here. The executive summary is that SE Linux has implemented:

Perhaps the most interesting thing to come out of this discussion was the observation that there are a number of security-related projects doing similar work. All would like to see that work in the standard kernel someday. For now, however, it is very hard to know what the shape of a standard Linux high-security mechanism should really be. So the developers would really like to see the security projects get together and define a set of standard interfaces which will allow them all to hook into the kernel, so that users can experiment with more than one of them. That does not look like an easy project, don't expect to see it anytime all that soon.

Asynchronous I/O

Ben LaHaise presented his asynchronous I/O implementation. True async I/O in the kernel has been a wishlist item for a long time; it appears that it will become real, finally, in 2.5.Ben decided not to implement the POSIX async I/O scheme directly, preferring something a bit more flexible and workable. His implementation provides a new set of system calls:

The user space async I/O library provides access to the above system calls. It also falls back to the older, thread-based implementation in cases where the async operation does not work; this allows the new interface to be used transparently even when certain types of devices do not yet support asynchronous operations. Currently only raw disk I/O and filesystem I/O support can be used with the new implementation; that list will certainly grow, however.

An interesting problem that comes up with async I/O is how to signal the completion of events in the kernel. Normally this signalling is done with wait queues, but, in the async case, there is no process waiting. So Ben has augmented the wait queue code to allow for active callback functions when the queue is woken up. The callback, in this case, will arrange for the completion information to be made available to the process which initiated the operation.

There is much to do, still. The list includes documentation (of course), accounting and resource limits, expanding the range of device types which support async I/O (to network sockets, in particular), incorporating the single-copy code, and more.

Power management

Andy Grover presented the work that is being done on power management in Linux. The old APM code, of course, is obsolete - the industry is moving over to ACPI, which is a much more comprehensive standard. It is also large, the specification for ACPI fills a full 500 pages.

Intel's ACPI implementation is out there now, and is being used by FreeBSD. They are currently waiting for the 2.5 fork to submit it for Linux. As a measure of the complexity of ACPI, consider that this implementation has "5-7 person years" of development work in it already, and does not yet have support for putting systems to sleep.

Sleeping, it turns out, is a complicated task. The ACPI code must be able to find every device on the system, tell it to save its context, and power it down. This has to be done in the right order, of course; powering down a disk controller while the drives are still spinning would be a mistake.

So the ACPI folks need a general way to find all of the devices on the system, and to tell them to go to sleep (or wake up). Andy proposed a that the layout of the various, bus-specific device structures in the kernel be changed to facilitate ACPI, but a more likely approach looks to be the repurposing of the pci_device structure into a more general structure which describes any device on the system. Much of the work for that change has already been done; it will likely be finished early in 2.5.

There will also need to be a serious effort to get power management into as many device drivers as possible. Power management work is not seen as particularly "sexy," so this could take a little while.

The ACPI implementation concerns quite a few developers. ACPI actually defines its own language; system components then provide code in that language to implement a number of ACPI functions. As a result, the Linux ACPI implementation must include an interpreter for this language in the kernel - and it is huge. Relatively few people want to bloat their kernels in that way. Some people are also nervous about just what that interpreter could be programmed to do.

ACPI kind of looks like the future for many BIOS-oriented tasks, however; it is not just for suspending laptops. Increasingly, ACPI will be needed to be able to find devices and busses on the system, or to be able to work with multiprocessor systems. Power management is of great interest in the server arena as well. Anybody who has to air-condition a large room full of servers wants them to be able to shut down (and reduce power consumption and heat production) when not operating at peak load.

So ACPI is important, despite its bulk. 2.4 already has the ACPI interpreter in it, but 2.5 will be where we see a truly working implementation of this standard.

Bitkeeper

Larry McVoy had been unable to attend the BOF on source control, so he presented his Bitkeeper system in a special session at the end of Saturday. He was able to give an interesting presentation and focus on the features of Bitkeeper without getting into the licensing issues.

Larry McVoy

Bitkeeper is looking very nice. On the source control side, it provides a number of features that make it superior to the venerable CVS system. But much of the interesting work has been done on the user interface side. Dealing with the system is pleasant and easy, with tools provided which make it easy to create "changesets" and provide meaningful changelogs. There is also a nice tool for browsing the history of a system, and a "who do I blame" feature which makes it simple to find out who put in a particular change, and what other changes were made at the same time. Bitkeeper is a definite advance in the area of cooperative software development.

Larry has been trying to get kernel developers to use Bitkeeper for some time. Nobody was committing to anything at the summit, but we may yet see wider use of the system in the 2.5 development series.

Conclusion

The last segment of the summit was a discussion, let by Ted Ts'o, on release management and deadlines. Ted was going to propose that the kernel developers (and Linus, in particular) fix a specific "feature freeze" date for 2.5. That way, everybody knows when his code needs to be ready, and there is no post-freeze rush of people trying to get patches accepted.Unfortunately, I had to run out to catch my plane home. It was, in the end, easier to explain to you all why I missed this interesting discussion than to explain to my kids why I hadn't gotten back. If anybody has a summary of the discussion that they could send back to me, I would be delighted to include it in this space.

Overall, the kernel summit appears to have been a success. It is not often that this crowd gets to meet in this way, and people clearly enjoyed it. Many interesting discussions were held, and enthusiasm for the 2.5 development series appears to be high.

Group picture time at the kernel summit -

Annotated

Copyright 2001