A UAV mission over the Googleplex

Chris Anderson

The Long Tail

August 06, 2007

This weekend I was at the Google campus in Mountain View for the SciFoo scientific conference. My session was on using micro UAVs for mapping and scientific sensing, and I thought it would be fun to give a little demonstration. What better than a UAV mission over the Googleplex?

This was cool for a number of reasons. First, you may have noticed that the Google campus "satellite" view is much higher resolution than almost any other imagery on Google Maps. (The way you can tell is to switch to satellite view, click on the "Link to this page" button, and then edit the URL so that "z=19" becomes "z=22", which is the maximum resolution available. Like this [ Link ].)
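If you'd rather not edit the URL by hand, the same tweak can be scripted. This is just a minimal sketch: the function name `set_zoom` and the example URL (including its coordinates) are made up for illustration, and the only thing taken from the trick above is that the zoom level lives in the "z" query parameter.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def set_zoom(url: str, zoom: int) -> str:
    """Return the URL with its "z" query parameter set to the given zoom."""
    parts = urlsplit(url)
    # Keep every existing parameter, replacing only the value of "z".
    query = [
        (key, str(zoom) if key == "z" else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    # If the link had no "z" parameter at all, add one.
    if not any(key == "z" for key, _ in query):
        query.append(("z", str(zoom)))
    # safe="," keeps lat,long pairs readable instead of percent-encoding them.
    return urlunsplit(parts._replace(query=urlencode(query, safe=",")))


# Placeholder link, not the real "Link to this page" URL.
example = "http://maps.google.com/maps?ll=37.422,-122.084&z=19"
print(set_zoom(example, 22))
```

Whether a given spot actually has imagery at z=22 is up to the map server; the script only rewrites the link.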

Suffice it to say, that imagery didn't come from a satellite. Instead I happen to know it was taken by a small aircraft with 5-megapixel cameras at 600 ft.

I wanted to do better. So at the crack of dawn on Sat morn, I was on a nearby field planning my mission.

Because I didn't have a runway to take off from, I used GeoCrawler 3 [ http://diydrones.com/profiles/blog/show?id=705844%3ABlogPost%3A729 ], which can be hand launched. I wanted better imagery than the cellphone camera could provide, so I put the cellphone inside the fuselage (it would still do the GPS navigation) and strapped a digital camera to the bottom of the plane instead. I put in a few GPS lat-longs taken from Google Maps and off we flew.

It was a perfect day for flying, with virtually no wind and nobody around. I took off manually, steered the plane to the north end of the campus at about 200 ft, and turned it over to the autopilot. Because I was so close I could see the plane the whole time, and truth be told I mostly did manual turning at the end of each run because we were in a built-up area and I didn't want to take the risk of missing a waypoint and losing sight of the plane, even for a few seconds. So to be accurate this wasn't a fully autonomous UAV mission, although the plane was capable of that.

The first pass was with a Pentax Optio A30, which is a small 10 megapixel digicam that supports an IR remote. I'd stuck an IR trigger board [ http://www.hexpertsystems.com/prism/ ] over the IR window on the front of the camera, connected that to my serial-to-servo board [ http://www.rentron.com/Robo-WareV2.htm ], and had the cellphone autopilot send a trigger signal to the board every two seconds (which is the fastest the camera will take pictures in remote mode). This worked great except for two things:

So it was back to the launching point for a quick change to another camera. I swapped in the Pentax W30, which has a built-in time-lapse mode and doesn't need to be IR-triggered. I knew that the camera's minimum delay interval between time-lapse shots was an interminable ten seconds, which would make mosaicing the pictures impossible, but I hoped I'd at least get a few sample shots to demonstrate the high-resolution imagery possible from these planes.
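To see why the shot interval matters so much for mosaicing, here's a rough back-of-the-envelope sketch. Stitching needs consecutive frames to overlap, and the overlap depends on how far the plane travels between shots compared with how much ground each frame covers. The 200 ft altitude and the two- and ten-second intervals come from this flight; the 15 m/s ground speed and the 45-degree along-track field of view are guesses I've plugged in for illustration, not measured values.

```python
import math

FT_TO_M = 0.3048


def footprint_m(altitude_m: float, fov_deg: float) -> float:
    """Ground distance covered by one frame along the flight path,
    for a camera pointing straight down over flat ground."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)


def forward_overlap(altitude_m: float, fov_deg: float,
                    speed_m_s: float, interval_s: float) -> float:
    """Fraction of each frame shared with the next one.
    A negative result means there is a gap between frames."""
    spacing = speed_m_s * interval_s  # distance flown between shots
    return 1 - spacing / footprint_m(altitude_m, fov_deg)


altitude = 200 * FT_TO_M  # about 200 ft, as flown
fov = 45.0                # ASSUMED along-track field of view, degrees
speed = 15.0              # ASSUMED ground speed, m/s

for interval in (2, 10):  # IR-trigger interval vs. time-lapse interval
    overlap = forward_overlap(altitude, fov, speed, interval)
    print(f"{interval:>2} s between shots: overlap = {overlap:+.0%}")
```

With these assumed numbers the two-second interval leaves some overlap between frames, while the ten-second interval comes out negative, meaning whole strips of ground are never photographed. Change the speed or field of view and the exact figures move, but the ten-second case stays well short of what a stitcher needs.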

I'd previously tested the W30 from the air and found that it didn't have the same motion blur problem, for reasons that I don't quite understand (it's only 7 megapixels, so perhaps its CCD processes the imagery faster).

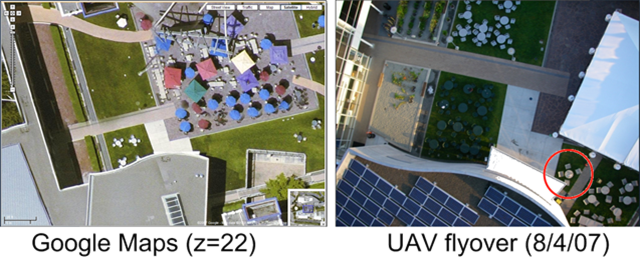

Another launch, with the same starting points and path, and a quick return to check the imagery. This looked much better, so I took it up for one final series of passes. By 7:30 am we were done, and it was time to head in to breakfast at the conference and admire the shots on a laptop. Here are some samples of what we got, with the same locations at the highest resolution on Google Maps as a comparison, and with my imagery at about the same scale.

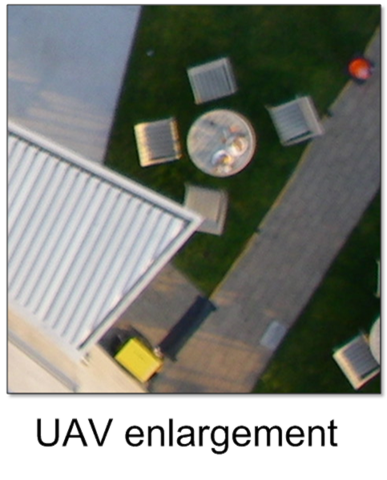

The difference between the two is that at my resolution (resolving details as small as 3 cm), I can keep zooming way in. Like this (of the circled area above right):

The advantages of the low-altitude UAV imagery go beyond the higher resolution. It's more recent, so, for example, you can see that since the imagery in Google Maps was taken, Google has put solar cells on every roof in the complex. That's great, and they deserve more credit for this. Updating their imagery to show the extent of it (see example below) would help.

You can also fact-check existing imagery. On Google Maps, one of the campus's cool infinity pools has the company's logo at the bottom. In reality, it doesn't--the logo was photoshopped on:

(Real Googlers will spot the flaw in my data. What you're actually seeing in my images are the blue pool covers, not the water itself. But I walked over and lifted them up to confirm that the logo isn't there. Ground truth!)

All pretty cool. But before you get too impressed, remember that I can't tile these shots into a proper map because I don't have enough imagery. I just have loads of random shots of the campus from the air, which isn't really very useful. Next time I'm going to attempt to solve this with a Canon Digital Elph camera shooting in rapid-sequence mode, which is less than a second between shots. Canon's got some of the fastest CCD sensors and image processing chips around, so I'm optimistic that this will give me enough imagery for the photo-stitching software to take over and make a proper map. That, however, will require making a custom camera mount with a servo that can physically push the camera shutter button, because the Canons don't have IR remotes or anything else I can electronically trigger. This isn't too hard, but may take me a week or two. Stay tuned...

(You can follow all the progress on this project at diydrones.com)

Finally, a note for anybody thinking of doing the same thing--don't. I checked this with the right people at Google, and they unofficially agreed to look the other way because I was flying a small (under 3 lbs) electric plane that couldn't hurt either buildings or people, and was doing the demonstration as part of a scientific conference on campus. Google has lots of 24/7 security on electric vehicles, and you really don't want them coming after you. Plus there's those rooftop laser cannons ;-)

Copyright 2007 http://www.thelongtail.com/